Access Granted

HUB CITY MEDIA EMPLOYEE BLOG

Ping Identity Verified Trust for Microsoft Entra

When it comes to Workforce Identity, Microsoft Entra has long been seen as a competitor to Ping Identity. After all, most organizations rely on Active Directory and Entra by default to run Windows machines, manage file shares, and access Microsoft Office and Office 365.

For years, customers often viewed the decision as either/or: choose Entra or choose Ping. If they went with Entra, we lost. If they went with Ping, we won.

That’s no longer the case.

Today, customers can choose Entra and still gain significant value from Ping by layering on Ping Universal Services like DaVinci, Protect, Verify, and MFA.

Organizations can combine Entra with Ping Universal Services to:

Deploy MFA to reduce account takeover risk

Use adaptive MFA to minimize unnecessary prompts and combat MFA fatigue

Integrate risk signals from third-party tools like ThreatMetrix or CrowdStrike via DaVinci, enabling stronger contextual security

Add identity verification where MFA alone isn’t enough
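To make adaptive, risk-based MFA more concrete, here is a minimal Python sketch of a step-up decision. The signal names, weights, and thresholds are hypothetical placeholders. They are not how PingOne Protect or DaVinci actually score risk. In a real deployment, the signals would come from the risk engine and the third-party feeds mentioned above.

```python
from dataclasses import dataclass


@dataclass
class SignInContext:
    # Hypothetical signals; real ones would come from a risk engine or EDR feed.
    known_device: bool
    usual_location: bool
    impossible_travel: bool
    endpoint_high_risk: bool


def risk_score(ctx: SignInContext) -> int:
    """Add up illustrative weights for each risky signal."""
    score = 0
    if not ctx.known_device:
        score += 30
    if not ctx.usual_location:
        score += 20
    if ctx.impossible_travel:
        score += 50
    if ctx.endpoint_high_risk:
        score += 40
    return score


def step_up_decision(ctx: SignInContext) -> str:
    """Map the score to an action: no prompt, MFA, or identity verification."""
    score = risk_score(ctx)
    if score < 30:
        return "allow"            # low risk: skip the MFA prompt entirely
    if score < 70:
        return "mfa"              # moderate risk: ask for a second factor
    return "verify_identity"      # high risk: MFA alone isn't enough
```

Skipping the prompt for low-scoring sign-ins is what reduces MFA prompts. Any real thresholds would need tuning against actual sign-in data.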

Together, these integrations deliver:

Lower risk through stronger authentication

Smarter security by evaluating risk signals and applying MFA only when necessary

Advanced orchestration for a smoother user experience and future-ready identity capabilities

Beyond security, this approach drives measurable cost savings and productivity improvements:

Lower license costs – Customers don’t need a costly Microsoft 365 E5 (Entra ID P2) license. The necessary external authentication methods (EAM) integration is already available with the more affordable Microsoft 365 E3 (Entra ID P1) license¹.

Fewer MFA interruptions – Customers leveraging Ping’s risk-based approach have seen a 65–89% reduction in MFA prompts. This boosts productivity while maintaining strong security.

Lower integration costs – With Ping DaVinci’s no-code orchestration, additional integrations and enhancements can be implemented quickly and at a fraction of the cost.

Anecdotally, some organizations have found that this approach costs just 20% as much as upgrading to a full E5 license.

Flexibility and interoperability in IAM always benefit customers. With Ping Identity and Microsoft Entra working together, organizations can:

Strengthen defenses against account takeover

Reduce MFA fatigue with smarter, adaptive risk-based authentication

Simplify future integrations while keeping costs low

The result is a security posture that’s stronger, more user-friendly, and significantly more cost-efficient.

With Ping Identity and Microsoft Entra working together, organizations can unlock stronger security, reduce MFA fatigue, and cut costs — all while building a more flexible, future-ready IAM strategy.

Want to learn more?

Fill out the form below to explore how Ping Identity can enhance your Microsoft Entra environment and deliver immediate value to your organization.

What are Non-Human Identities?

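Non-human identities are digital identities that belong to software, devices, and automated processes rather than to people. These include, but are not limited to: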

Service accounts – Used by applications or services for system interactions.

APIs and API keys – Facilitating machine-to-machine communication, often authenticated through API keys, OAuth tokens or certificates.

IoT devices – Internet-connected devices like sensors, smart appliances and cameras.

Virtual machines – Components that may require specific identities for accessing cloud resources.
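Many non-human identities authenticate with OAuth tokens rather than passwords. The following minimal Python sketch shows how a service account might request a token using the OAuth 2.0 client credentials grant (RFC 6749, section 4.4). The token URL, client ID, secret, and scope are hypothetical. The sketch only builds the request and does not send it.

```python
import base64
import urllib.parse
import urllib.request


def build_client_credentials_request(token_url: str, client_id: str,
                                     client_secret: str,
                                     scope: str) -> urllib.request.Request:
    """Build (but don't send) an OAuth 2.0 client credentials token request."""
    # RFC 6749 section 4.4: the client authenticates as itself, no end user.
    body = urllib.parse.urlencode(
        {"grant_type": "client_credentials", "scope": scope}).encode()
    # HTTP Basic client authentication (RFC 6749 section 2.3.1): the ID and
    # secret are form-urlencoded, joined with ":", then base64-encoded.
    pair = f"{urllib.parse.quote_plus(client_id)}:{urllib.parse.quote_plus(client_secret)}"
    credentials = base64.b64encode(pair.encode()).decode()
    return urllib.request.Request(
        token_url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Basic {credentials}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
    )
```

Sending the request with urllib.request.urlopen(req) would return a JSON body containing an access_token. In practice, the client secret belongs in a vault, not in source code. Finding secrets that are stored in code is exactly the kind of exposure a non-human identity program looks for.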

Should you secure them? YES... Don’t know how to secure them?

WE CAN HELP!

Hub City Media offers a proven non-human account remediation program that uses custom tools and processes to address your non-human account vulnerabilities while minimizing risk.

Our non-human identity team has developed a low-risk, vendor-agnostic approach to mitigate your organization’s account risk based on industry standards.

Hub City Media’s proven program has been vetted through years of successful delivery covering numerous industries.

Let’s reduce your identity risk — without disrupting your operations.

Want to learn more? Leave a comment or use our Contact Form to connect with our Non-Human Identity team.

The Push for Passwordless: Bridging Strong Authentication and Legacy Systems

Discover how passwordless authentication, like FIDO Passkey, is poised to revolutionize online security and user experiences in our latest blog post. Learn why strong authentication methods are essential in combating cyberattacks, and explore solutions like ForgeRock Enterprise Connect Passwordless that bridge the gap for legacy applications and critical infrastructure. Don't wait for the next security breach – start crafting your strong authentication strategy today!

In a previous post I wrote about FIDO Passkey and how this technology will revolutionize how we authenticate to services in our personal and work lives. Strong passwordless authentication, like Passkey, can no doubt improve user experience and the security of our online accounts.

Strong authentication, passwordless, or phishing-resistant MFA are key technologies for preventing some of the most common cyberattacks. They block phishing and reduce account takeover, which accounts for a large percentage of all breaches. The cost of a breach has been rising over the years, and most estimates don’t include the damage to a company’s reputation or potential lost economic opportunity.

However, these strong authentication technologies are often not compatible with every system in an enterprise. The reality is that legacy applications make up a significant portion of the systems at most companies. Application modernization is always underway, but it can’t happen fast enough. And it’s not just applications that need strong authentication. We also need to secure server access and other critical infrastructure, like switches and routers. Ideally, we’d protect these resources with passwordless solutions, or at least some type of phishing-resistant MFA. Sadly, FIDO Passkey can’t help us secure these systems.

Fortunately, there are vendors that provide passwordless solutions that can be used to protect legacy applications and system infrastructure. One example is ForgeRock Enterprise Connect Passwordless. It supports:

Modern applications by using modern protocols like FIDO.

Protection of legacy technologies including databases, servers, and desktops via standard protocols like RADIUS.

Seamless integration with other ForgeRock access products in the cloud or on-premises.

Customization of the login experience to include functionality like risk-based step-up authentication.
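RADIUS is one of the standard protocols that lets older systems hand authentication off to an external service. The following minimal Python sketch builds an RFC 2865 Access-Request packet with a PAP User-Password attribute. It shows what a RADIUS client, such as a server or network device, sends. The sketch illustrates the protocol only and makes no claim about how ForgeRock Enterprise Connect Passwordless handles RADIUS internally. The user name, password, and shared secret are hypothetical, and nothing is sent over the network.

```python
import hashlib
import os
import struct

ACCESS_REQUEST = 1        # RFC 2865 packet code
ATTR_USER_NAME = 1        # RFC 2865 attribute types
ATTR_USER_PASSWORD = 2


def attribute(attr_type: int, value: bytes) -> bytes:
    # Type (1 octet), Length (1 octet, includes these 2 header octets), Value
    return struct.pack("!BB", attr_type, len(value) + 2) + value


def hide_password(password: bytes, secret: bytes, authenticator: bytes) -> bytes:
    """PAP password hiding from RFC 2865, section 5.2."""
    # Pad with NUL octets to a non-zero multiple of 16.
    padded = password + b"\x00" * (-len(password) % 16)
    if not padded:
        padded = b"\x00" * 16
    hidden = b""
    previous = authenticator
    for i in range(0, len(padded), 16):
        # b_i = MD5(secret + previous block), c_i = p_i XOR b_i
        mask = hashlib.md5(secret + previous).digest()
        block = bytes(p ^ m for p, m in zip(padded[i:i + 16], mask))
        hidden += block
        previous = block
    return hidden


def build_access_request(identifier: int, user: str, password: str,
                         secret: bytes) -> tuple[bytes, bytes]:
    """Return (packet, request_authenticator) for an Access-Request."""
    authenticator = os.urandom(16)  # random Request Authenticator
    attrs = attribute(ATTR_USER_NAME, user.encode())
    attrs += attribute(ATTR_USER_PASSWORD,
                       hide_password(password.encode(), secret, authenticator))
    # Header: Code, Identifier, Length (whole packet), then the authenticator.
    header = struct.pack("!BBH", ACCESS_REQUEST, identifier, 20 + len(attrs))
    return header + authenticator + attrs, authenticator
```

A real client would send this packet over UDP (port 1812) and match the reply by identifier. MD5-based hiding is weak by modern standards, which is one reason RADIUS traffic is best kept on trusted network segments.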

Furthermore, ForgeRock Enterprise Connect Passwordless allows customers to phase in the solution gradually. Customers can start with a small pilot and expand to larger populations and more applications over time.

Passwordless, strong authentication, and phishing-resistant MFA will soon be ubiquitous. These technologies are critical to blocking the most common attacks bad actors launch against enterprises. App modernization will help accelerate the adoption of modern passwordless methods like Passkey, but it shouldn’t be seen as a blocker to adoption. Technologies like ForgeRock Enterprise Connect Passwordless exist today. They can support legacy apps, provide a better user experience, and vastly improve security, and they are easy to deploy. Don’t wait for the next account compromise to start developing a strong authentication strategy. Identify vendors that can meet your use cases, or work with a trusted partner that can help you develop a strategy, perform product evaluations, and choose a direction today.

A Few Thoughts About Identiverse 2023


Identiverse 2023 has come and gone. For one week, the Identerati descended on the Aria Hotel in Las Vegas to explore all things related to digital identity. For over a decade, Identiverse has been the preeminent conference for learning the latest about digital identity for consumers, the workforce, or citizens. If you have anything to do with digital identity in your organization, you should never miss this event.

There were numerous informative sessions this year. So what did I learn? Well, frankly, a lot. Here are my top three takeaways from Identiverse 2023:

Passkeys are Ready for Prime Time

I've written about Passkeys in a previous post. I'm very bullish on this technology, and so were many speakers at Identiverse. The FIDO Alliance has managed to advance the standard to the point of wide industry adoption. The talks this year focused more on practical implementation advice than on tutorials about how Passkeys work. I believe the consensus is that Passkey is ready for customer authentication applications alongside traditional username/password login methods. In practice, this means inviting users to set up a Passkey during login and password recovery flows, as well as when they view their profile, specifically their security settings.

Not convinced? Use your browser to navigate to g.co/passkey, sign in with your personal Google Account, and set up a Passkey for your Google account. Google Workspace will be enabled with Passkey in the near future. Apple already lets you sync Passkeys across your devices via iCloud Keychain, and at the WWDC 2023 keynote it announced Passkey sharing in iOS 17, iPadOS 17, and macOS Sonoma.
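To give a feel for what "inviting users to set up a Passkey" involves on the server side, here is a minimal Python sketch. It builds WebAuthn registration options for a discoverable credential (a passkey). The relying party ID, names, and timeout are hypothetical. A real implementation would store the challenge in the user's session and would normally use a maintained WebAuthn library to verify the browser's response.

```python
import base64
import os


def b64url(data: bytes) -> str:
    # WebAuthn JSON payloads usually carry binary values as unpadded base64url.
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode()


def b64url_decode(value: str) -> bytes:
    return base64.urlsafe_b64decode(value + "=" * (-len(value) % 4))


def passkey_creation_options(rp_id: str, rp_name: str, user_handle: bytes,
                             username: str, display_name: str) -> dict:
    """Build PublicKeyCredentialCreationOptions-style JSON for a new passkey."""
    return {
        # Fresh random challenge; store it server-side and check it on the response.
        "challenge": b64url(os.urandom(32)),
        "rp": {"id": rp_id, "name": rp_name},
        # Opaque, stable user handle; avoid putting an email address here.
        "user": {"id": b64url(user_handle), "name": username,
                 "displayName": display_name},
        "pubKeyCredParams": [
            {"type": "public-key", "alg": -7},    # ES256
            {"type": "public-key", "alg": -257},  # RS256
        ],
        "authenticatorSelection": {
            "residentKey": "required",      # discoverable credential, i.e. a passkey
            "userVerification": "preferred",
        },
        "timeout": 300000,                  # milliseconds
        "attestation": "none",
    }
```

The browser-side code decodes the base64url fields back to binary before passing the options to navigator.credentials.create(). Setting residentKey to "required" is what makes the credential discoverable, so the user can later sign in without typing a username.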

Verifiable Credentials and SSI are Not Yet Ready for Prime Time

Verifiable Credentials (VCs) and Self-Sovereign Identity (SSI) have been slowly making strides with standards and initial implementations, but these new conventions of digital identity are not quite ready for practical applications yet. I'd say we are closer today to achieving initial use cases for Verifiable Credentials than to full SSI.

Personally, I'm relieved to see these technologies decoupled from blockchain, which I view as an unnecessary complication. Two sessions in particular seemed to sum up the state of things best. Jeremy Grant's talk, titled "The Four Horsemen of the SSI Apocalypse," raised (surprise!) four problems with VCs and SSI that will need to be overcome. Most of his points centered on separating the hype from the reality. For example, with respect to privacy, what's to stop Relying Parties (RPs) from collecting the VCs of everyone who uses them on their site? RPs have an incentive to collect as much data on you as possible, and there is nothing special about VCs that will stop that type of harvesting.

The second talk was by Vittorio Bertocci, titled "Verifiable Credentials for the Identity Practitioner." Vittorio's talk focused on the practical technical issues of implementing VCs securely at scale. He laid out three major technical problems that will need to be solved. To be clear, these technologies are necessary and have a lot of power to enhance privacy. They put users back in control of key aspects of their digital identities. We need them. There is just more work to be done.

The Identity Community is Thriving

Identiverse 2023 was possibly the largest Identiverse in terms of attendance. It's clear this is the conference for a growing and very passionate group within the larger cybersecurity community. Most of us never chose to be identity professionals. We sort of stumbled into the field because no one was doing the job. However, once we rolled up our sleeves and dove into the work we realized we care deeply about seeing digital identity done right. We keep coming back to Identiverse year after year to connect with the community, to learn from each other, and to re-ignite that passion.

Take a look at Lance Peterman's talk titled "Lessons Learned from Lessons Taught". Lance and I, like so many others, share a genuine desire to teach and encourage a new generation of practitioners to take up the field. We need to grow our community and to see it re-invigorated with new faces and fresh ideas. Identiverse is one of the largest gatherings where that can happen. I'll be back next year. I can't wait to learn new things and see the continued growth of our community.

Winners and Losers in a Passkey Future


Passkey is a new password-less authentication method that is being developed by the FIDO Alliance. Passkey promises to eliminate passwords once and for all, and it has the potential to disrupt (in a good way) the field of identity and access management. So, if Passkey is going to disrupt our industry, who are the winners and losers going to be as Passkey replaces passwords in our day-to-day lives?

What is Passkey?

Passkeys are designed to be more secure and easier to use than passwords, and they can be used to sign in to websites and apps on any device. Passkeys work by generating a unique key pair for each website or app that a user signs in to. The public key is shared with the website or app, while the private key is stored on the user's device. When the user signs in, the device uses the private key to sign a one-time challenge from the website or app. The site then verifies that signature with the public key it already holds, confirming the user's identity without requiring them to remember and enter a password.

Passkey is a feature of the operating system, and the latest versions of the major operating systems and browsers from Apple, Microsoft, and Google all support it. Passkey works equally well on desktop or mobile devices. It does not require a separate hardware security key or any additional software to be downloaded to a mobile phone. It costs consumers nothing to use, but they do need to upgrade to the latest OS and browser versions. Supporting Passkeys does require changes to server-side systems, so websites (relying parties) will need to add support before their users can take advantage of this technology. Many sites have already announced support for Passkey. Notably, Google has recently enabled Passkey logins for personal and Workspace accounts.

Passkey technically meets the requirements of multi-factor authentication. The user authenticates to the site, or relying party, using something they have (the private key). That key is usually unlocked by a mechanism determined by the OS vendor that involves something they are or know, such as interaction with a mobile device or application, a fingerprint, facial recognition, or a PIN. So it's possible to consider Passkey a replacement for MFA. According to the FIDO Alliance, it is still working with regulators to have them accept Passkey as strong authentication that meets MFA requirements.

So hopefully you are convinced that Passkeys are going to revolutionize how we authenticate to the myriad of web sites and apps we use every day. So who wins, who loses, and who should we watch out for as this revolution takes hold?

Winners

Consumers will clearly be the biggest beneficiaries of Passkey. Consumers will now have a very strong, phishing-resistant way to sign in to websites and applications. Passwords will all but be eliminated. Passkey will improve the registration and sign-in experience, making it easier than ever for people to secure online access. They simply won't have to remember, write down, or otherwise manage multiple passwords for their online interactions.

Businesses that adopt Passkey will see benefits that derive from easier account sign-up and password-less login experiences. E-commerce companies will benefit from much smoother checkout processes as the barrier to creating a secure account gets lower. Financial institutions, meanwhile, will see stronger security and fewer incidents of account takeover and fraud. If the FIDO Alliance's work with regulators succeeds, Passkey logins will meet MFA requirements, raising the level of assurance of Passkey-protected accounts.

Losers

The Bad Guys (criminals, phishers, etc.) are going to lose big time as more users adopt Passkey. Passkey will make a successful phishing attack nearly impossible, because there is no credential or MFA code for an unwitting human to hand over. Each passkey is bound to the legitimate site's domain, and its private key is only used to sign a challenge, never disclosed. This should have a huge impact on account takeovers and fraud. Criminals who target consumers and their online accounts are going to lose passwords as an attack vector, which will make hacking individual consumer accounts much more difficult.
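The phishing resistance comes from the protocol itself. The browser records the origin it is talking to in clientDataJSON, the authenticator binds the assertion to the relying party ID, and the signature covers both. The minimal Python sketch below shows the server-side checks on that data, assuming a hypothetical relying party at example.com. It deliberately stops short of verifying the signature itself, which requires a crypto library and the stored public key. In practice, a maintained WebAuthn library should perform all of these checks.

```python
import base64
import hashlib
import hmac
import json


def b64url_decode(value: str) -> bytes:
    return base64.urlsafe_b64decode(value + "=" * (-len(value) % 4))


def check_client_data(client_data_json: bytes, expected_challenge: bytes,
                      expected_origin: str) -> dict:
    """Validate the browser-supplied client data for a sign-in (assertion)."""
    data = json.loads(client_data_json)
    if data.get("type") != "webauthn.get":
        raise ValueError("not an authentication response")
    challenge = b64url_decode(data.get("challenge", ""))
    if not hmac.compare_digest(challenge, expected_challenge):
        raise ValueError("challenge mismatch: stale or replayed response")
    if data.get("origin") != expected_origin:
        # A look-alike phishing site would show up here with its own origin.
        raise ValueError(f"unexpected origin {data.get('origin')!r}")
    return data


def rp_id_hash_matches(authenticator_data: bytes, rp_id: str) -> bool:
    # The first 32 bytes of authenticatorData are SHA-256(RP ID).
    return hmac.compare_digest(authenticator_data[:32],
                               hashlib.sha256(rp_id.encode()).digest())


def signed_bytes(authenticator_data: bytes, client_data_json: bytes) -> bytes:
    # The passkey's private key signs authenticatorData || SHA-256(clientDataJSON);
    # the server verifies that signature with the stored public key (not shown).
    return authenticator_data + hashlib.sha256(client_data_json).digest()
```

In practice, the browser refuses to use a passkey on a domain that doesn't match its relying party ID, so a phishing page usually can't obtain an assertion at all. These server-side checks act as a second line of defense.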

Consumer-grade Password Manager vendors are going to struggle for relevance in a world with Passkey. As the OS vendors improve the user experience, there will simply be less need for third-party vendors to supply solutions for managing passwords. This will become particularly acute as Passkey becomes available as a sign-in option on more and more websites. Password Managers may see increased usage in the enterprise, but I'd place a fairly large bet on these vendors seeing their businesses dry up as Passkey becomes more popular.

Customer Identity and Access Management vendors that don't currently support Passkey, or don't plan to, are going to be in trouble. It won't be long before Passkey support becomes part of the selection criteria for any company dealing with customer authentication, and vendors without it will simply be eliminated from consideration.

Consumer websites and service providers who are slow to adopt Passkey could see drops in sign-ups and, ultimately, revenue. Customers are more likely to do business with companies that offer friction-free sign-up processes. Passkey will transform these interactions into password-less ones that just work. It will lower the cognitive load on consumers at purchase time, because they won't suddenly be faced with a choice between creating an account with an email and password and logging in with a social provider. They'll just be able to use Passkey. So service providers ignore Passkey at their own peril.

Wait and See

I'm not categorizing Enterprise Password-less Solution vendors just yet, because I'm not sure how things will pan out for this group. On the one hand, they stand to win, since enterprises will want to provide password-less sign-on experiences for their workforce. This is especially true of vendors who can provide password-less login to legacy systems. On the other hand, they might lose in the long run, as companies adopt more modern systems and phase out legacy solutions precisely because those systems can't be secured with ubiquitous, low-cost solutions like Passkey.

There is no question Passkey will have an impact on customer access management. The FIDO Alliance and its member organizations have finally created a standard for strong authentication that eliminates the password. Furthermore, they've managed to drive wide adoption of Passkey across all major OS and browser vendors. It's a technology you can use today. We are definitely on the brink of major change, and that change is going to shake things up. I can't wait to see what unfolds, and I can't wait to ditch all the passwords I'm using. I'll bet you will too.

The Next Frontier of Social Engineering: Generative AI


You'd have to be living under a rock these days to not read an article about Generative AI, such as ChatGPT, Google Bard, or image generators like Stable Diffusion. Breathless descriptions of the powers of these tools permeate the literature and social media. To be fair, Generative AI is an extremely powerful new technology, and I believe it has quite a bit of promise to boost productivity and augment human potential. However, as we learn to live with our new machine assistants, I think it's also important to understand their superpower: they are really good at making things up. In fact, they are really good at making up things that sound really convincing, even if they are completely false. That's why I think one of the biggest threats they pose is helping attackers create novel content that will supercharge all sorts of social engineering and phishing attacks.

Phishing, spear phishing, and other social engineering attacks rely on human interaction to trick people into revealing sensitive information or taking actions that harm themselves or their organization. Generative AI can be used to create realistic social engineering attacks that are very difficult to detect. Many of these AI models have been trained on text data obtained from across the internet. An adversary can leverage much of this publicly available data to create the perfect email, SMS, or watering hole website that will convince users to click a malicious link.

So, when faced with such a powerful mechanized onslaught, what's a poor human to do? Luckily, some of our existing controls will still thwart even the most convincing phishing attack.

Email filtering and malware detection will still work against malicious links, suspicious attachments, or embedded malware.

Strong phishing-resistant MFA or passwordless authentication is a great line of defense against social engineering attacks aimed at individual users or the service desk.

Good intelligence and even general awareness of the latest social engineering scams helps blunt the advantage adversaries may have in generating these convincing attacks.

Future countermeasures may include those based on detection of content created by Generative AI. These techniques could be used as more powerful filters in our email systems or browsers. They could help our human intuition in detecting machine-generated misinformation for the purposes of circumventing our security. Of course, it might also detect blog posts from lazy CTOs actually written by ChatGPT. Probably should have edited that out.

Solving the Challenges of an Identity Governance and Administration (IGA) Deployment


Identity Governance and Administration (IGA) has been part of the workforce reality for decades, but many organizations still struggle to escape the tedious, error-prone manual processes used to demonstrate basic compliance. Hub City Media’s (HCM) experience helping clients achieve their IGA goals shaped our efforts to “build a better mousetrap”.

Our goals were simple: make something easy to implement, smooth to operate and a pleasure to use.

We collaborate with clients every day and find that governance programs usually fall into one of these broad categories:

Ad-hoc Manual - Having little to no governance in place, this client is unable to meet basic compliance requirements.

Multi-Spreadsheet - The governance cycle consists of a series of fractured, usually manual processes: collecting data from disparate system extracts, massaging extracts into spreadsheets, distributing spreadsheets via email, following up with certifiers throughout the campaign, and ultimately ending with messy, manual remediation.

Quasi-automation - Usually either a set of scripts that handles data input and/or remediation, or an online governance tool that replaces the spreadsheets, is fed access data, and automates notifications, while remediation stays manual.

Shelf-ware or unused IGA capabilities - We have seen clients with existing in-house IGA capabilities that, due to complexity or cost, are underutilized or never deployed. Frustration with an IGA platform’s implementation effort and complexity may push clients to reevaluate their software vendor in order to realize their expected return on investment.

When solving these issues, clients often face one or more common challenges:

Designing IGA platforms without expertise

Implementing legacy processes

Insufficient planning and data modeling for modern IGA needs

Requirements based on current tools and products

Requiring heavy customization of new IGA tools and products

In designing and developing IGA tools and solutions, HCM focuses on simple, menu-driven usability over custom code. It’s a balancing act, but one we’ve found great success with when providing business value to our clients.

With these challenges in mind, here are some guiding principles to help make IGA implementations successful. They outline the end-to-end approach we used to help a client successfully navigate a set of typical IGA use cases.

Guidelines for IGA deployments:

1. Experienced IGA Project Team - Many organizations try to staff IGA projects with existing IT people, who may have little to no IGA experience. Subject matter and product knowledge are critical to planning, designing, and building an IGA platform that meets the necessary security, compliance, and usability requirements and therefore provides value to the organization.

IGA resources with specific knowledge can be difficult to acquire in the job market, so clients often engage System Integrators (like Hub City Media) for this expertise.

2. Build a strong foundation - As tempting as it is to “skip to the end” when reading a good story, it’s important to follow the natural progression to make that ending more meaningful and satisfying. This analogy holds true for IGA projects too. Of course it’s important to show value, but the challenge is to take the necessary time to build a winning strategy and then execute it. Short-changing this phase of the project often leaves the implementation team building on the fly, without any real guidance or understanding of the business’s end goals or the impact on end users. Maintain discipline, educate stakeholders on IGA processes and terminology, align team members’ expectations, and invest in thorough requirements gathering and design with full participation from key stakeholders in IT and business roles. Agreeing on the “blueprint” will allow everyone to envision the end state.

3. Adapt technology and business processes - Change is uncomfortable. Initial conversations often start with clients asking us to “automate our existing processes” or to migrate from one product to another “without changing the experience.” While that’s understandable, we advise taking a more strategic view. Look for opportunities for process improvement, and re-align business and technology to take advantage of IGA standards, automation, and native capabilities. Try not to force an uncomfortable union between less compatible parts.

4. Define small wins - Aside from the prerequisite infrastructure for deploying your IGA products, the next goal of your strategic planning should be defining a reasonable scope and schedule for addressing critical IGA needs and delivering value to the business. Maintaining manageable objectives will help keep expectations in line with delivery timetables, building confidence in the IGA platform and further driving adoption. Too often, especially in platform migrations, we see overambitious goals and lengthy project schedules that can be derailed by scope creep, misaligned expectations, and “all or nothing” success criteria.

5. Customize only when necessary and within supported frameworks - IGA standards have matured considerably over the last decade. Improved protocols and security, based on industry experience and analysis, have narrowed the gap between many vendors’ IGA capabilities. While product vendors still offer some unique capabilities or approaches, especially in User Experience (UX), the emphasis has clearly shifted toward standards over custom solutions. Following our advice in #3, adaptation should be the first priority, but there are certainly situations that call for some enhancement or personalization of IGA product capabilities. We recommend a thorough examination of the underlying requirements and goals before choosing this approach. Where customization is deemed necessary, make sure to use the product vendor’s supported methods for achieving this advanced level of complexity. Always view the requirement in terms of perceived business value, time/cost to implement, maintainability, upgradeability, and supportability. Decisions made with these perspectives in mind are less likely to introduce unnecessary customization. In almost every case, the outcome will be far more successful with a standards-based approach that leverages the selected tools’ capabilities as strengths rather than weaknesses.

6. Setting goals for the future - Building a strong foundation and achieving small wins lays the essential groundwork for some really exciting developments in modern governance practices, namely adopting Artificial Intelligence and Machine Learning to drive value. Once futuristic, these technologies are now a present-day reality and can provide several benefits to the IGA space, including:

Data-driven support for certification and access request decisions

Complete automation of low-risk decisions through business policies and rulesets

Enhanced security by identifying patterns and redundancies in Identity dataset(s)
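As a small illustration of complete automation of low-risk decisions through business policies and rulesets, here is a minimal Python sketch of a rule-based triage step in a certification campaign. The fields, risk tiers, and 90-day threshold are hypothetical. A real IGA platform would express rules like these through its own policy configuration rather than custom code, in keeping with guideline 5 above.

```python
from dataclasses import dataclass


@dataclass
class CertificationItem:
    user: str
    entitlement: str
    risk_tier: str            # hypothetical tiers: "low", "medium", "high"
    days_since_last_use: int
    user_is_active: bool      # e.g. from an HR feed


def triage(item: CertificationItem) -> str:
    """Apply illustrative policy rules; anything not covered goes to a person."""
    if not item.user_is_active:
        return "revoke"                   # access held by a departed user
    if item.risk_tier == "low" and item.days_since_last_use <= 90:
        return "auto-certify"             # low risk and recently used
    return "route-to-reviewer"            # human decision required


def summarize(items: list[CertificationItem]) -> dict[str, int]:
    """Count how many items each rule outcome produced."""
    counts: dict[str, int] = {}
    for item in items:
        decision = triage(item)
        counts[decision] = counts.get(decision, 0) + 1
    return counts
```

Even a simple split like this reduces the number of items reviewers must examine by hand, leaving their attention for the higher-risk access.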

We hope this exploration of our experience assisting and advising IGA projects provided valuable insights and tips to get you started, no matter where you are on the IGA journey.

CONTACT US for an introductory meeting with one of our IGA experts, where we can apply this information to your unique organization.

Deploying Identity and Access Management (IAM) Infrastructure in the Cloud - PART 4: DEPLOYMENT

Explore critical concepts (planning, design, development and implementation) in detail to help achieve a successful deployment of ForgeRock in the cloud.

Blog Series: Deploying Identity and Access Management (IAM) Infrastructure in the Cloud

Part 4: DEPLOYMENT

Standing Up Your Cloud Infrastructure

In the previous installment of this four-part series on “Deploying Identity and Access Management (IAM) Infrastructure in the Cloud,” we discussed the development stage, where we created the objects and automation used to implement the cloud infrastructure design.

In part 4, we discuss applying all of the hard work we’ve done on research, design and development to deploy cloud infrastructure on AWS. Before moving forward, you might want to refer back to the previous installments to review the process.

Part 1 - PLANNING

Part 2 - DESIGN

Part 3 - TOOLS, RESOURCES and AUTOMATION

Prerequisites

It is assumed at this point that:

You have an active AWS account

You have an IAM user or role in AWS with administrative privileges

You have confirmed that your AWS resource limits in the appropriate region(s) are sufficient to deploy all resources in your design

Your deployment artifacts, such as CloudFormation templates, shell scripts, and customized config files, have been staged in an S3 bucket in your account, a code repository, etc.

Any resources that your CloudFormation template depends on will be in place before execution. For example, EC2 instance profiles, key pairs, AMIs, etc. have been created or are otherwise available.

You have a list of parameter values ready, e.g. VPC name, VPC CIDR block, and the CIDR block(s) of external network(s) for your routing tables (essentially anything your CloudFormation template expects as input).

You have been incrementally testing your CloudFormation templates and other deployment artifacts as you created them, are satisfied they are error-free, and are confident they will achieve the desired results.

Goals

Goals at this stage:

Deploy the cloud infrastructure

Validate the cloud network infrastructure

Deploy EKS

Integrate the cloud infrastructure with the on-prem network environment

Configure DNS

Prepare for the ForgeRock implementation to commence

1 - Deploy the cloud infrastructure

A new CloudFormation stack can be created from the AWS CloudFormation console. From this interface, you can specify the location of your template and enter parameter values. The parameter value fields, descriptions and constraints will vary between implementations, and are defined in your template.

As the stack is building, you can monitor the resources being created in real time. The events list can be quite lengthy, especially if you have created a template that supports multiple environments. That said, the template deployment can complete in a matter of minutes, and is successful if the final entry with the stack name listed in the Logical ID field returns a status of ‘CREATE_COMPLETE’.

Any other final status indicates failure. Depending on the options selected when you deployed the stack, CloudFormation may automatically roll back the resources that were created or leave them in place to help in debugging your template.

After all the work it took to get to this point, it might come as a surprise how quickly and easily this part of your deployment can be completed. By the same token, it also needs to be pointed out how quickly and easily it can be taken down, potentially by accident, without proper access controls and procedures in place.

At the very least, we recommend that “Termination Protection” on the deployed stack be set to “enabled”. While it will not prevent someone with full access to the CloudFormation service from intentionally deleting the stack, it adds the extra step of disabling termination protection during the deletion process, and that could in some cases be enough to avert disaster.
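If you would rather check the outcome from a script than watch the console, the rule above (look at the stack's own final event) is easy to encode. The following is a minimal Python sketch that assumes you already have the event list, for example from `aws cloudformation describe-stack-events`, parsed into dicts carrying the API's `LogicalResourceId`, `ResourceType` and `ResourceStatus` fields, newest event first. The stack name `iam-nonprod-vpc` is hypothetical.

```python
from typing import Iterable, Mapping

# Assumption: each event is a dict shaped like an entry in the StackEvents list
# returned by CloudFormation's DescribeStackEvents (newest event first).
STACK_RESOURCE_TYPE = "AWS::CloudFormation::Stack"


def stack_outcome(stack_name: str, events: Iterable[Mapping[str, str]]) -> str:
    """Classify a stack deployment from its event history."""
    for event in events:
        # Stack-level events use the stack name as their logical ID.
        if (event.get("ResourceType") == STACK_RESOURCE_TYPE
                and event.get("LogicalResourceId") == stack_name):
            status = event.get("ResourceStatus", "")
            if status == "CREATE_COMPLETE":
                return "succeeded"
            if status == "CREATE_IN_PROGRESS":
                return "in progress"
            return "failed"  # e.g. CREATE_FAILED, ROLLBACK_IN_PROGRESS, ROLLBACK_COMPLETE
    return "in progress"  # no stack-level event recorded yet


sample = [  # hypothetical events, newest first
    {"LogicalResourceId": "iam-nonprod-vpc", "ResourceType": STACK_RESOURCE_TYPE,
     "ResourceStatus": "CREATE_COMPLETE"},
    {"LogicalResourceId": "VPC", "ResourceType": "AWS::EC2::VPC",
     "ResourceStatus": "CREATE_COMPLETE"},
]
print(stack_outcome("iam-nonprod-vpc", sample))
```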
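Termination protection can be toggled in the console, or from the AWS CLI with `aws cloudformation update-termination-protection`. The sketch below only builds that command line so it can be reviewed, logged or passed to `subprocess.run`; the stack name is a hypothetical placeholder.

```python
import shlex
from typing import List


def termination_protection_cmd(stack_name: str, enable: bool = True) -> List[str]:
    """Build the AWS CLI call that turns termination protection on (or off)."""
    flag = "--enable-termination-protection" if enable else "--no-enable-termination-protection"
    return ["aws", "cloudformation", "update-termination-protection",
            flag, "--stack-name", stack_name]


# "iam-nonprod-vpc" is a hypothetical stack name.
print(shlex.join(termination_protection_cmd("iam-nonprod-vpc")))
```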

2 - Validate the cloud network infrastructure

The list of items to check may change depending on your design, but in most cases, you should go through your deployment and validate:

Naming conventions and other tags / values on all resources

Internet gateway

NAT gateways

VPC CIDR, subnets, availability zones, routing tables, security groups and ACLs

Jump box instances (and your ability to SSH to them, starting with a public facing jump box if you’ve created one)

Tests of outbound internet connectivity from both public and private subnets

EC2 instances such as EKS console instances (discussed shortly), and instances that will be used to deploy DS components that are not containerized

If applicable, the AWS connection objects to the on-prem network, like VPN gateways, customer gateways and VPN connections
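Parts of this checklist lend themselves to a quick script. As a minimal sketch, assuming you have exported each subnet's name, CIDR block, availability zone and a public / private tier label (the tuple layout and all names below are hypothetical), Python's standard `ipaddress` module can confirm that every subnet sits inside the VPC CIDR, that no two subnets overlap, and that each tier spans at least two availability zones:

```python
import ipaddress
from itertools import combinations
from typing import List, Tuple

Subnet = Tuple[str, str, str, str]  # (name, cidr, availability zone, tier)


def validate_subnets(vpc_cidr: str, subnets: List[Subnet], min_azs: int = 2) -> List[str]:
    """Return a list of problems found in a VPC's subnet layout (empty means OK)."""
    vpc = ipaddress.ip_network(vpc_cidr)
    nets = [(name, ipaddress.ip_network(cidr), az, tier) for name, cidr, az, tier in subnets]
    problems = []
    # Every subnet must sit inside the VPC CIDR block.
    for name, net, _, _ in nets:
        if not net.subnet_of(vpc):
            problems.append(f"{name} ({net}) is outside the VPC CIDR {vpc}")
    # No two subnets may share addresses.
    for (name_a, net_a, _, _), (name_b, net_b, _, _) in combinations(nets, 2):
        if net_a.overlaps(net_b):
            problems.append(f"{name_a} overlaps {name_b}")
    # Each tier should be spread across enough availability zones.
    for tier in sorted({tier for _, _, _, tier in nets}):
        azs = {az for _, _, az, t in nets if t == tier}
        if len(azs) < min_azs:
            problems.append(f"{tier} subnets span {len(azs)} AZ(s); expected at least {min_azs}")
    return problems


layout = [  # hypothetical two-AZ layout
    ("public-a", "10.50.0.0/24", "us-east-1a", "public"),
    ("public-b", "10.50.1.0/24", "us-east-1b", "public"),
    ("private-a", "10.50.16.0/20", "us-east-1a", "private"),
    ("private-b", "10.50.32.0/20", "us-east-1b", "private"),
]
print(validate_subnets("10.50.0.0/16", layout))
```

For the layout shown, the function returns an empty list. It complements, rather than replaces, checking routes, security groups and ACLs in the console.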

3 - Deploy EKS

One approach we’ve used to deploy EKS clusters is to create an instance during the CloudFormation deployment of the VPC that has tools like kubectl and is seeded with VPC-specific scripts and configuration files. We call this an “EKS console”. During the VPC deployment, parameters are passed to a config file on the instance via the userdata script on the EC2 object; this file specifies deployment settings for the associated EKS cluster.

After the VPC is deployed, the EKS console instance serves the following purposes:

You can simply launch a shell script from it that executes AWS CLI commands, deploying the cluster to the correct subnets, etc.

You can manage the cluster from this instance as well as launch application deployments, services, ingress controllers, secrets, etc.

If you’ve taken a similar approach, you can now launch the EKS deployment script. When it completes, you can test out connecting to and managing the cluster.
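As an illustration of what such a script might run, here is a Python sketch that assembles the AWS CLI and kubectl calls for creating a cluster, waiting for it to become active, writing a kubeconfig entry and running a smoke test. It is not the script we used; the cluster name, role ARN, subnet and security group IDs are hypothetical, and in practice they would come from the config file seeded on the EKS console.

```python
import shlex
from typing import List, Sequence


def eks_console_commands(cluster: str, role_arn: str, subnet_ids: Sequence[str],
                         security_group_ids: Sequence[str], region: str) -> List[List[str]]:
    """AWS CLI / kubectl calls an EKS console script might run, in order."""
    vpc_config = "subnetIds={},securityGroupIds={}".format(
        ",".join(subnet_ids), ",".join(security_group_ids))
    return [
        # Create the managed control plane in the VPC's subnets.
        ["aws", "eks", "create-cluster", "--region", region, "--name", cluster,
         "--role-arn", role_arn, "--resources-vpc-config", vpc_config],
        # Block until the control plane reports ACTIVE.
        ["aws", "eks", "wait", "cluster-active", "--region", region, "--name", cluster],
        # Write a kubeconfig entry so kubectl can reach the cluster.
        ["aws", "eks", "update-kubeconfig", "--region", region, "--name", cluster],
        # Smoke test: the default "kubernetes" service should be listed.
        ["kubectl", "get", "svc"],
    ]


# All identifiers below are hypothetical placeholders.
for cmd in eks_console_commands(
        "iam-dev", "arn:aws:iam::123456789012:role/eks-service-role",
        ["subnet-0aaa", "subnet-0bbb"], ["sg-0ccc"], "us-east-1"):
    print(shlex.join(cmd))
```

Worker nodes are a separate step; they can be launched from a CloudFormation template once the control plane is active.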

4 - Integrate the cloud infrastructure with the on-prem network environment

If your use case includes connectivity to an on-prem network, you have likely already created or will shortly create the AWS objects necessary to establish the connection.

If, for example, you are creating a point-to-point VPN connection to a supported internet-facing endpoint on the on-prem network, you can now download a configuration file for the connection from the AWS VPC console. This file contains critical device-specific parameters, in addition to encryption settings, endpoint IPs on the AWS side, and pre-shared keys. It should be shared with your networking team securely.

While the file is largely pre-configured, it is incumbent on the networking team to make any necessary modifications before applying it to the on-prem VPN gateway. This ensures that the configuration meets your use case and does not conflict with settings already present on the device.

Once your networking team has completed the on-prem configuration, and you have once again confirmed that the routing tables in the VPC are configured correctly, the tunnel can be activated by initiating traffic from the on-prem side. After an initial time-out, the tunnel should come up and traffic should be able to flow. If the VPN and routing appear to be configured correctly on both sides and traffic still is not flowing, check the firewall settings.
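When traffic is not flowing, it helps to confirm which route a packet will actually use. VPC route tables pick the most specific (longest prefix) route that matches the destination, so a route to the on-prem range via the virtual private gateway takes precedence over a `0.0.0.0/0` default route. The minimal sketch below applies that rule to a route table you have exported as `(destination CIDR, target ID)` pairs; the CIDRs and gateway IDs are hypothetical.

```python
import ipaddress
from typing import List, Optional, Tuple


def route_target(routes: List[Tuple[str, str]], destination_ip: str) -> Optional[str]:
    """Return the target of the most specific route that covers destination_ip."""
    ip = ipaddress.ip_address(destination_ip)
    candidates = [(ipaddress.ip_network(cidr), target) for cidr, target in routes]
    matches = [(net, target) for net, target in candidates if ip in net]
    if not matches:
        return None  # no matching route: traffic to this address is dropped
    # Longest prefix wins, e.g. 192.168.0.0/16 beats 0.0.0.0/0.
    return max(matches, key=lambda m: m[0].prefixlen)[1]


routes = [  # hypothetical private-subnet route table
    ("10.50.0.0/16", "local"),
    ("0.0.0.0/0", "nat-0abc"),
    ("192.168.0.0/16", "vgw-0def"),  # on-prem range via the virtual private gateway
]
print(route_target(routes, "192.168.10.5"))
```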

5 - Configure DNS

One frequent use case we encounter is the need for DNS resolvers in the VPC to resolve domain names in zones hosted on private DNS servers in the customer’s on-prem network. This sometimes includes the ability for DNS resolvers on the on-prem network to resolve names in Route 53 private hosted zones associated with the VPC.

Once you have successfully established connectivity between the VPC and the on-prem network, you can support this functionality via Route 53 Resolver “inbound endpoints” and “outbound endpoints”. Outbound endpoints, combined with forwarding rules, essentially act as forwarders in Route 53: each rule specifies an on-prem domain name and the DNS server(s) that can fulfill the request. Inbound endpoints provide addresses in the VPC that can be configured as forwarding targets on the on-prem DNS servers.

If, after configuring these forwarders and endpoints, resolution is not working as expected, check your firewall settings, ACLs, etc.
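When both a broad and a narrow forwarding rule exist (for example, one for the corporate domain and one for a sub-domain served by different DNS servers), Route 53 Resolver uses the rule with the most specific domain name. The sketch below reproduces that selection so you can reason about which on-prem servers a given query will be forwarded to. The domains and server IPs are hypothetical, and a `None` result means no forwarding rule applies.

```python
from typing import Dict, List, Optional, Tuple


def _labels(name: str) -> List[str]:
    """Split a DNS name into lowercase labels, ignoring a trailing dot."""
    return name.lower().rstrip(".").split(".")


def pick_forwarding_rule(query_name: str,
                         rules: Dict[str, List[str]]) -> Optional[Tuple[str, List[str]]]:
    """Return (domain, target DNS servers) of the most specific matching rule."""
    query = _labels(query_name)
    best: Optional[Tuple[str, List[str]]] = None
    best_len = 0
    for domain, targets in rules.items():
        suffix = _labels(domain)
        # A rule matches the domain itself and any name beneath it.
        if len(suffix) <= len(query) and query[-len(suffix):] == suffix:
            if len(suffix) > best_len:
                best, best_len = (domain, targets), len(suffix)
    return best  # None: no forwarding rule applies


rules = {  # hypothetical outbound forwarding rules
    "corp.example.com": ["10.1.1.10", "10.1.1.11"],
    "dev.corp.example.com": ["10.2.2.10"],
}
print(pick_forwarding_rule("app01.dev.corp.example.com", rules))
```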

6 - Prepare for the ForgeRock implementation to commence

Congratulations! You’ve reached the very significant milestone of deploying your cloud infrastructure, validating it, integrating it with your on-prem network, and perhaps adding additional functionality like Route 53 Resolver endpoints. You have also deployed at least one EKS cluster, presumably in the Dev environment (if you designed your VPC to support multiple environments), and you are prepared to deploy clusters for the other lower environments when ready.

It’s now time to bring in your developers / Identity Management team so they can proceed with getting ForgeRock staged, tested and implemented.

Demo your cloud infrastructure so everyone has an operational understanding of what it looks like and where applications and related components need to be deployed

Utilize AWS IAM as needed to create accounts that have only the privileges team members need to do their jobs. Some team members may not need console or command line API access at all, and will only need SSH access to EC2 resources.

Be prepared to provide cloud infrastructure support to your team members

Start planning out your production cloud infrastructure, as you now should have the insight and skills needed to do it successfully
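As an example of scoping access narrowly, a team member who only needs to point kubectl at one cluster can be given an identity-based policy allowing `eks:DescribeCluster` on that cluster, which is the call `aws eks update-kubeconfig` makes. The Python sketch below generates such a policy document. The region, account ID and cluster name are hypothetical, and permissions inside Kubernetes itself are granted separately within the cluster.

```python
import json
from typing import Any, Dict


def describe_cluster_policy(region: str, account_id: str, cluster_name: str) -> Dict[str, Any]:
    """Identity-based policy allowing DescribeCluster on a single EKS cluster."""
    cluster_arn = f"arn:aws:eks:{region}:{account_id}:cluster/{cluster_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DescribeOneCluster",
                "Effect": "Allow",
                # Enough for `aws eks update-kubeconfig` against this cluster.
                "Action": "eks:DescribeCluster",
                "Resource": cluster_arn,
            }
        ],
    }


# Account ID and cluster name are hypothetical.
print(json.dumps(describe_cluster_policy("us-east-1", "123456789012", "iam-dev"), indent=2))
```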

Next Steps

In this fourth installment of “Building IAM Infrastructure in Public Cloud,” we’ve discussed performing the actual deployment of your cloud infrastructure, validating it, and getting it connected to your on-prem network.

In future blogs, we will explore the process of planning and executing the deployment of ForgeRock itself into your cloud infrastructure. We hope you’ve enjoyed this series so far, and that you’ve gained some valuable insights on your way to the cloud.

CONTACT US for an introductory meeting with one of our networking experts, where we can apply this information to your unique system.

Deploying Identity and Access Management (IAM) Infrastructure in the Cloud - PART 3: TOOLS, RESOURCES and AUTOMATION

Explore critical concepts (planning, design, development and implementation) in detail to help achieve a successful deployment of ForgeRock in the cloud. In Part 3 of the series, we take a closer look at tools, resources and automation to help you set up for implementation…

Blog Series: Deploying Identity and Access Management (IAM) Infrastructure in the Cloud

Part 3: TOOLS, RESOURCES and AUTOMATION

In the previous installment of this four-part series on “Building IAM Infrastructure in the Public Cloud,” we discussed core cloud infrastructure components and design concepts.

In this third installment, you move into the development stage, where you take your cloud infrastructure design and set up the objects and automation that will be used to implement it.

Deployment Automation Goals and Methodology

At this stage of deploying your infrastructure, you should have a functional design of the cloud infrastructure that, at minimum, includes:

AWS account in use

Region you are deploying into

VPC architectural layouts for all environments, including CIDR blocks, subnets, and availability zones

Connectivity requirements to the internet, peer VPCs, and on-prem data networks

Inventory of AWS compute resources used

List of other AWS services, how they are being used, and what objects need to be created

List and configuration of security groups and access control lists required

Clone of the Forgeops 6.5.2 repo from GitHub

Deployment Objectives

The high-level objectives for proceeding with your deployment are as follows:

Create an S3 bucket to store Cloudformation templates

Build and execute Cloudformation templates to deploy the VPC hosting the lower environments and the production environment, their respective EKS console hosts, and additional EC2 resources as needed per your specific solution

Deploy EKS clusters from the EKS console hosts via the AWS CLI

Deploy EKS cluster nodes from the EKS console hosts via the AWS CLI
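If you prefer to launch the VPC stack from the CLI rather than the console, the template staged in your S3 bucket can be referenced by URL. The following Python sketch only assembles an `aws cloudformation create-stack` call. The bucket, key, stack name and parameter names (`VpcName`, `VpcCidr`) are hypothetical and must match whatever your own template defines.

```python
import shlex
from typing import Dict, List


def create_stack_cmd(stack_name: str, bucket: str, key: str, region: str,
                     params: Dict[str, str]) -> List[str]:
    """Build an `aws cloudformation create-stack` call for a template staged in S3."""
    template_url = f"https://{bucket}.s3.{region}.amazonaws.com/{key}"
    cmd = ["aws", "cloudformation", "create-stack", "--region", region,
           "--stack-name", stack_name, "--template-url", template_url,
           # Protect the stack from accidental deletion from day one.
           "--enable-termination-protection"]
    if params:
        cmd.append("--parameters")
        # Shorthand syntax; values that contain commas need special handling.
        cmd += [f"ParameterKey={k},ParameterValue={v}" for k, v in params.items()]
    return cmd


# Hypothetical bucket, key, stack and parameter names.
print(shlex.join(create_stack_cmd(
    "iam-nonprod-vpc", "iam-cfn-templates", "vpc/nonprod-vpc.json", "us-east-1",
    {"VpcName": "iam-nonprod", "VpcCidr": "10.50.0.0/16"})))
```

If your template creates IAM resources, CloudFormation also requires `--capabilities CAPABILITY_IAM` (or `CAPABILITY_NAMED_IAM` for named IAM resources).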

A Note on the Forgeops Repo

The Forgeops repo contains scripts and configuration files that serve as a starting point for modeling your deployment automation. It does not provide a turnkey solution for your specific environment or design and must be customized for your particular use case. Since this repo supports multiple cloud platforms, it is also important to distinguish the file naming conventions used for the cloud platform you are working with. In our scenario, “eks” either precedes or is a part of each filename that relates to deploying AWS VPCs and EKS clusters. Take some time to familiarize yourself with the resources in this repo.

Building the VPC Template

VPC templates will be created in AWS CloudFormation, using your VPC architecture design and an inventory of VPC components as a guide. Components will typically include:

Internet gateway

Public and private facing subnets in each availability zone

NAT gateways

Routing tables and associated routes

Access control lists

Security groups, including the cluster control plane security groups

Private and/or public jump box EC2 instances

EKS console instances

Additional ForgeRock related EC2 instances

S3 endpoints

Template Structure

While an extensive discussion on Cloudformation itself is beyond the scope of this text, a high level overview of some of the template sections will help get you oriented:

JSON-formatted CloudFormation template (YAML format is also supported)

The AWSTemplateFormatVersion section specifies the template version that the template conforms to.

The Description section provides a space to add a text string that describes the template.

The Metadata section contains objects that provide information about the template. For example, this section can contain configuration information for how to present an interactive interface where users can review and select parameter values defined in the template.

The Parameters section is the place to define values to pass to your template at runtime. For example, this can include the name of your VPC, the CIDR block to use for it, key pairs to use for EC2, and virtually any other configurable property of the AWS resources that your template launches.

The Mappings section is where you can create a mapping of keys and associated values that you can use to specify conditional parameter values, similar to a lookup table.

The Conditions section is where conditions can be defined that control whether certain resources are created or whether certain resource properties are assigned a value during stack creation or update.

The Resources section specifies the stack resources and their properties, like VPCs, subnets, routing tables, EC2 instances, etc.

The Outputs section describes the values that are returned whenever you view your stack's properties. For example, you can declare an output for an S3 bucket name which can then be retrieved from the AWS CLI.
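To see how these sections fit together, here is a deliberately tiny template, built as a Python dict and printed as JSON, that uses each top-level section once. It is a sketch rather than a usable VPC template: the parameter names, the `EnvSettings` mapping and the `IsProd` condition are hypothetical, and a real template would add subnets, gateways, route tables and the other resources listed earlier.

```python
import json
from typing import Any, Dict


def vpc_template_skeleton() -> Dict[str, Any]:
    """A deliberately tiny template that exercises each top-level section."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Illustrative VPC template skeleton (hypothetical names)",
        "Metadata": {
            # Groups parameters in the console's stack-creation form.
            "AWS::CloudFormation::Interface": {
                "ParameterGroups": [
                    {"Label": {"default": "Network"}, "Parameters": ["Environment", "VpcCidr"]}
                ]
            }
        },
        "Parameters": {
            "Environment": {"Type": "String", "AllowedValues": ["nonprod", "prod"],
                            "Default": "nonprod"},
            "VpcCidr": {"Type": "String", "Default": "10.50.0.0/16",
                        "Description": "CIDR block for the VPC"},
        },
        "Mappings": {
            # Lookup table keyed by environment.
            "EnvSettings": {"nonprod": {"NatGateways": "1"}, "prod": {"NatGateways": "3"}}
        },
        "Conditions": {
            "IsProd": {"Fn::Equals": [{"Ref": "Environment"}, "prod"]}
        },
        "Resources": {
            "Vpc": {
                "Type": "AWS::EC2::VPC",
                "Properties": {
                    "CidrBlock": {"Ref": "VpcCidr"},
                    "EnableDnsSupport": True,
                    "EnableDnsHostnames": True,
                    "Tags": [
                        {"Key": "Name", "Value": {"Fn::Sub": "${Environment}-vpc"}},
                        {"Key": "Tier",
                         "Value": {"Fn::If": ["IsProd", "production", "non-production"]}},
                    ],
                },
            }
        },
        "Outputs": {
            "VpcId": {"Description": "ID of the new VPC", "Value": {"Ref": "Vpc"}},
            "NatGatewayCount": {
                "Value": {"Fn::FindInMap": ["EnvSettings", {"Ref": "Environment"}, "NatGateways"]}
            },
        },
    }


print(json.dumps(vpc_template_skeleton(), indent=2))
```

Once the stack is deployed, an output such as `VpcId` can be read back with `aws cloudformation describe-stacks --stack-name <name> --query "Stacks[0].Outputs"`.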

CloudFormation Designer

AWS CloudFormation provides the option of using a graphical design tool called CloudFormation Designer that dynamically generates the JSON or YAML code associated with AWS resources. The tool utilizes a drag and drop interface along with an integrated JSON and YAML editor, and is an excellent way to get your templates started while creating graphical representations for your resources. Once you’ve added and arranged your resources in the template, you can download it for more granular editing of resource attributes, and add scripting to the other sections of the template.

High-level view of Non-Production VPC. Supports individual environments for Development, Testing and Performance Testing, as well as shared infrastructure resources

High-level view of Production VPC with a single Prod environment and infrastructure resources

Closer view of subnet objects. These are private subnets, and are arranged by availability zone. The two subnets on the left are in AZa, the two in the center are in AZb, and the two on the right are in AZc.

Closer view of one component in the template, which in this case is a security group that will be used during the deployment and operation of the EKS cluster for our Test environment. Note the JSON code that defines this object in the bottom pane, and the visual connections that illustrate relationships to other objects.

The same object with code in YAML format

EKS Console Instances

The EKS console EC2 instances will be used to both deploy and manage the EKS clusters and cluster nodes after the VPC deployment itself has completed. Each environment and its respective cluster will have its own dedicated console instance.

Each console instance will host shell scripts that invoke the AWS CLI to create an EKS cluster, and launch a CloudFormation template to deploy the worker nodes. The Forgeops repo contains sample scripts and configuration files that you can use as a starting point to build your own deployment automation. The eks-env.cfg file in particular provides a list of parameters for which values need to be provided. These parameters include, for example, the cluster name, which subnets the worker nodes should be deployed on, the number of worker nodes to create, the instance types and storage sizes of the worker nodes, the ID of the EKS Optimized Amazon Machine Image to use, etc. These values can be added manually after the VPC and the EKS console instance are created, or the VPC CloudFormation template can be leveraged to populate these values automatically during the VPC deployment.
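To make the idea of populating the config file concrete, here is a Python sketch that renders settings as `KEY=value` lines a bash script can `source`. The key names are hypothetical, not the ones defined in the Forgeops `eks-env.cfg`; in a CloudFormation-driven setup, the same values would typically be written by the instance's UserData, for example with `Fn::Sub`.

```python
import shlex
from typing import Dict, Sequence, Union

Value = Union[str, int, Sequence[str]]


def render_env_cfg(settings: Dict[str, Value]) -> str:
    """Render KEY=value lines that a bash deployment script can `source`."""
    lines = ["# Generated during the VPC deployment (illustrative keys)"]
    for key, value in settings.items():
        if isinstance(value, (list, tuple)):
            value = ",".join(value)  # e.g. a list of subnet IDs
        # Quote anything the shell would otherwise split or interpret.
        lines.append(f"{key}={shlex.quote(str(value))}")
    return "\n".join(lines) + "\n"


print(render_env_cfg({
    # Hypothetical keys and values; the real eks-env.cfg defines its own names.
    "EKS_CLUSTER_NAME": "iam-dev",
    "EKS_WORKER_SUBNETS": ["subnet-0aaa", "subnet-0bbb", "subnet-0ccc"],
    "EKS_WORKER_NODE_COUNT": 3,
    "EKS_WORKER_INSTANCE_TYPE": "m5.xlarge",
    "EKS_AMI_ID": "ami-0123456789abcdef0",
}))
```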

Prerequisites

Your EKS console instances will require the following software to be installed before launching the installation scripts:

AWS CLI

kubectl

git, if you need to clone your scripts and config files from a git repo

Forgeops scripts can be used to install these prerequisites as well as additional utilities like helm, or you can prepare your own scripts.

To avoid the need for adding AWS IAM account access keys directly to the instance, it is recommended to utilize an IAM instance profile to provide the necessary rights to deploy and manage EKS and EC2 resources from the CLI.

A key pair will need to be created for use with the EKS worker nodes

A dedicated security group needs to be created for each EKS cluster

An EKS Service IAM Role needs to be created in IAM
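The instance profile and the EKS service role are both backed by IAM roles, and each role needs a trust policy naming the AWS service allowed to assume it. A minimal sketch of those two trust policies follows. Permissions are attached separately (for the EKS service role, AWS provides the managed `AmazonEKSClusterPolicy`), and the variable names are only illustrative.

```python
import json
from typing import Any, Dict


def service_trust_policy(service_principal: str) -> Dict[str, Any]:
    """Trust policy letting the given AWS service assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": service_principal},
                "Action": "sts:AssumeRole",
            }
        ],
    }


# Role attached (via an instance profile) to the EKS console instances.
EKS_CONSOLE_TRUST = service_trust_policy("ec2.amazonaws.com")
# EKS service role that the control plane assumes.
EKS_SERVICE_TRUST = service_trust_policy("eks.amazonaws.com")

print(json.dumps(EKS_SERVICE_TRUST, indent=2))
```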

Next Steps

In this third installment of “Building IAM Infrastructure in Public Cloud,” we’ve discussed building the automation necessary to deploy your AWS VPCs and EKS clusters. In Part 4, we will move forward with deploying your VPCs, connecting them to other networks such as your on-prem network and / or peer VPCs, and finally deploying your EKS clusters.

CONTACT US for an introductory meeting with one of our networking experts, where we can apply this information to your unique system.

Deploying Identity and Access Management (IAM) Infrastructure in the Cloud - PART 2: DESIGN

Explore critical concepts (planning, design, development and implementation) in detail to help achieve a successful deployment of ForgeRock in the cloud. In Part 2 of the series, we take a closer look at core cloud infrastructure components and design concepts…

Blog Series: Deploying Identity and Access Management (IAM) Infrastructure in the Cloud

Part 2: DESIGN - Architecting the Cloud Infrastructure

In Part 1 of this series, we discussed:

Overall design

Cloud components and services

“Security of the Cloud” vs. “Security in the Cloud”

Which teams from within your organization to engage with on a cloud initiative

In Part 2, we will take a closer look at core cloud infrastructure components and design concepts.

For a visual deep dive into Running IAM using Docker and Kubernetes, check out our webinar with ForgeRock.

The VPC Framework

VPCs

The “VPC” or “Virtual Private Cloud” represents the foundation of the cloud infrastructure where most of our cloud resources will be deployed. It is a private, logically isolated section of the cloud which you control, and can design to meet your organization’s functional, networking and security requirements.

At a minimum, one VPC in one of AWS’s operating regions will be required.

Regions and Availability Zones

AWS VPCs are implemented at the regional level. Regions are separate geographic areas, and are further divided into “availability zones” which are multiple isolated locations linked by low-latency networking. VPCs should generally be designed to operate across at least two to three availability zones for high availability and fault tolerance within a region.

Interconnectivity

When you are in the early stages of your design, it will be helpful to ascertain what kind of interconnectivity you will need. A VPC will more than likely need to be connected to some combination of other networks, such as the internet, peer VPCs and on-prem data centers. This needs to be considered in your design.

Public-facing resources that need to be accessible from the internet will require publicly routable IP addresses, and must reside on subnets within your VPC that are configured with a direct route to the internet. For resources that do not directly face the internet but need outbound internet access, one or more internet-facing NAT gateways can be added to the VPC.

Establishing connectivity to other VPCs in the same or a different AWS account can be achieved through a) “VPC Peering”, which establishes point-to-point connections, or b) a “Transit Gateway”, which uses a hub-and-spoke model.
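In AWS terms, what makes a subnet public is a default route (`0.0.0.0/0`) in its route table pointing at an internet gateway; private subnets with outbound access send that route to a NAT gateway instead. The sketch below classifies a subnet from its exported routes using the target ID prefixes (`igw-`, `nat-`); the route entries are hypothetical.

```python
from typing import List, Tuple


def subnet_tier(routes: List[Tuple[str, str]]) -> str:
    """Classify a subnet from its route table's (destination, target ID) pairs."""
    default_targets = [target for dest, target in routes if dest == "0.0.0.0/0"]
    if any(t.startswith("igw-") for t in default_targets):
        return "public"  # direct route to an internet gateway
    if any(t.startswith("nat-") for t in default_targets):
        return "private with outbound internet via NAT"
    return "private without internet access"


# Hypothetical route tables.
print(subnet_tier([("10.50.0.0/16", "local"), ("0.0.0.0/0", "igw-0abc")]))
print(subnet_tier([("10.50.0.0/16", "local"), ("0.0.0.0/0", "nat-0def")]))
```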

Reference Architecture for Identity and Access Management (IAM) cloud deployment

Connecting VPCs and on-prem networks also presents some options to consider. Point-to-point IPSec VPN tunnels can be created between VPCs and on-prem terminating equipment capable of supporting them. A Transit Gateway can also be used as an intermediary, where the VPN tunnels are established between the on-prem network and the Transit Gateway, and the VPCs are connected via “Transit Gateway Attachments.” This reduces the number of VPN tunnels you need to support when integrating multiple VPCs. AWS “Direct Connect” is also available as an option where VPN connections are insufficient for your needs.
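The difference in connection counts grows quickly with the number of VPCs. Because VPC peering is not transitive, full any-to-any connectivity among n VPCs needs n(n-1)/2 peering connections, while a Transit Gateway needs one attachment per VPC. The following sketch works through the arithmetic, assuming a single on-prem site and a single VPN connection per path:

```python
from typing import Dict


def connection_counts(vpcs: int) -> Dict[str, int]:
    """Compare connection counts for n VPCs plus one on-prem site."""
    return {
        # Every VPC peered with every other VPC (peering is not transitive).
        "vpc_peering_connections": vpcs * (vpcs - 1) // 2,
        # One site-to-site VPN connection from the on-prem site to each VPC.
        "vpn_connections_without_tgw": vpcs,
        # One VPN connection to the Transit Gateway, shared by all VPCs.
        "vpn_connections_with_tgw": 1,
        # One attachment per VPC plus the VPN attachment.
        "tgw_attachments": vpcs + 1,
    }


print(connection_counts(4))
```

For four VPCs, that is 6 peering connections versus 5 Transit Gateway attachments, and 4 VPN connections (8 tunnels, since each AWS site-to-site VPN connection has two) versus 1 VPN connection (2 tunnels).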

VPC IP Address Space and Subnet Layout

IP address space of VPCs connected to existing networks needs to be planned carefully. It must be sized to support your cloud infrastructure without conflicting with other networks or consuming significantly more addresses than you need. Each VPC will require a CIDR block that will cover the full range of IP subnets used in your implementation. Determining the proper size of CIDR blocks will be possible once the functional design of the cloud infrastructure is further along.
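A quick way to sanity-check a proposed VPC CIDR block against the networks it will connect to is to test for overlaps before requesting the allocation. This is a minimal sketch using Python's `ipaddress` module; the candidate block and the list of existing on-prem and peer VPC networks are hypothetical.

```python
import ipaddress
from typing import List


def vpc_cidr_conflicts(candidate: str, existing: List[str]) -> List[str]:
    """Return existing networks that overlap the candidate VPC CIDR block."""
    cand = ipaddress.ip_network(candidate)
    # AWS allows IPv4 VPC CIDR blocks between /16 and /28.
    if not 16 <= cand.prefixlen <= 28:
        raise ValueError(f"{cand} is outside the /16 to /28 range AWS allows for a VPC")
    return [net for net in existing if cand.overlaps(ipaddress.ip_network(net))]


# Hypothetical on-prem and peer VPC ranges.
print(vpc_cidr_conflicts("10.50.0.0/16", ["10.0.0.0/12", "10.50.128.0/20", "192.168.0.0/16"]))
```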

VPC Security and Logging

In keeping with the principle of “Security in the Cloud,” AWS provides a comprehensive set of tools to help secure your cloud environment. At a minimum, “Network ACLs” and “Security Groups” need to be configured to establish and maintain proper firewall rules both at the perimeter and inside of your VPC.

Other AWS native services can help detect malicious activity, mitigate attacks, monitor security alerts and manage configuration so it remains compliant with security requirements. It is also possible to capture and log traffic at the packet level on subnets and network interfaces, and log cloud API activity. You should take the opportunity to familiarize yourself with and utilize these services.

Some organizations may also have requirements to integrate 3rd party security tools, services, appliances, etc. with the cloud infrastructure.
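One behavior worth internalizing when writing network ACLs is how they are evaluated: rules are checked in ascending rule-number order, the first matching rule decides, and anything unmatched hits the final deny rule. The simplified Python model below (inbound rules only, TCP/UDP or all traffic, hypothetical CIDRs) shows why a low-numbered deny can shadow a broader allow:

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class NaclRule:
    number: int
    cidr: str
    protocol: str                         # "tcp", "udp" or "all"
    allow: bool
    ports: Tuple[int, int] = (0, 65535)   # inclusive range; ignored for "all"


def nacl_allows(rules: List[NaclRule], src_ip: str, protocol: str, port: int) -> bool:
    """Lowest rule number first; the first rule that matches decides."""
    ip = ipaddress.ip_address(src_ip)
    for rule in sorted(rules, key=lambda r: r.number):
        if ip not in ipaddress.ip_network(rule.cidr):
            continue
        if rule.protocol != "all" and (
                rule.protocol != protocol or not rule.ports[0] <= port <= rule.ports[1]):
            continue
        return rule.allow
    return False  # the implicit "*" rule denies anything unmatched


rules = [  # hypothetical inbound rules
    NaclRule(100, "10.0.0.0/8", "tcp", True, (443, 443)),
    NaclRule(90, "10.9.0.0/16", "all", False),  # evaluated before rule 100
    NaclRule(200, "0.0.0.0/0", "all", False),
]
print(nacl_allows(rules, "10.1.2.3", "tcp", 443), nacl_allows(rules, "10.9.1.1", "tcp", 443))
```

Keep in mind that network ACLs are stateless, so return traffic must be allowed explicitly (typically on ephemeral ports), whereas security groups are stateful and evaluate all of their rules together rather than in order.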

EKS

ForgeRock solutions can be implemented as containerized applications by leveraging “Kubernetes” - an open-source system for automating the deployment, scaling and management of containerized applications.

“EKS” is Amazon’s “Elastic Kubernetes Service.” It is a managed service that facilitates deploying and operating Kubernetes as part of your AWS cloud infrastructure. From a high-level infrastructure perspective, a Kubernetes cluster consists of “masters” and “nodes.” Masters provide the control plane to coordinate all activities in your cluster, such as scheduling application deployments, maintaining their state, scaling them and rolling out updates. Nodes provide the compute environment to run your containerized applications. In a cluster that is deployed using EKS, the worker nodes are visible as EC2 instances in your VPC. The master nodes are not directly visible to you and are managed by AWS.

An EKS cluster needs to be designed to have the resources needed to effectively run your workloads. Selection of the correct EC2 instance type for your worker nodes will depend on your particular environment. ForgeRock provides general guidance in the ForgeOps documentation to help in this process based on factors like the number of user accounts that will be supported; however, the final selection should be determined based on the results of performance testing before deploying into production.

While Kubernetes will automatically maintain the proper state of containerized applications and related services, the cluster itself needs to be designed to be self-healing and to recover automatically from infrastructure-related failures, such as a failed worker node or even a failed AWS availability zone. In AWS, this is accomplished by deploying nodes across multiple availability zones in the VPC and leveraging AWS Auto Scaling Groups to maintain or scale the appropriate number of running nodes.

It should be noted that EKS worker nodes can consume a large number of IP addresses. These addresses are reserved for use with application deployments and assigned to Kubernetes pods. The number of IP addresses consumed is based on the EC2 instance type used, particularly with respect to the number of network interfaces the instance supports, and how many IPs can be associated with these interfaces. Subnets hosting worker nodes must be properly sized to accommodate the minimum number of worker nodes you anticipate running in each availability zone. These subnets must also have the capacity to support additional nodes driven by events such as scaling out (due to high utilization), or the failure of another availability zone used by the VPC.
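The address consumption described above can be estimated with a little arithmetic. With the Amazon VPC CNI plugin (without prefix delegation), the maximum number of pods per node is ENIs x (IPv4 addresses per ENI - 1) + 2, where the +2 covers pods that use host networking. In the worst case, every address on every ENI is drawn from the node's subnet. The sketch below encodes both, plus the number of nodes each AZ must be able to host if the remaining AZs have to absorb a failed one. The m5.large figures (3 ENIs, 10 IPv4 addresses each) are from AWS's published limits; check the current values for your instance type.

```python
import math


def max_pods(enis: int, ipv4_per_eni: int) -> int:
    """Max pods per node under the Amazon VPC CNI (no prefix delegation)."""
    return enis * (ipv4_per_eni - 1) + 2


def worst_case_node_ips(enis: int, ipv4_per_eni: int) -> int:
    """Upper bound on subnet addresses one node can hold (every ENI fully populated)."""
    return enis * ipv4_per_eni


def nodes_per_az(total_nodes: int, azs: int, survive_az_failure: bool = True) -> int:
    """Nodes each AZ's worker subnet must be able to host."""
    if survive_az_failure:
        if azs < 2:
            raise ValueError("need at least two AZs to survive an AZ failure")
        return math.ceil(total_nodes / (azs - 1))
    return math.ceil(total_nodes / azs)


# m5.large: 3 ENIs x 10 IPv4 addresses each; six nodes spread over three AZs.
print(max_pods(3, 10), worst_case_node_ips(3, 10), nodes_per_az(6, 3))
```

With six m5.large workers across three AZs, each AZ's worker subnet should be able to hold 3 nodes x 30 addresses = 90 addresses, before adding headroom for scaling out.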

Other EC2 Resources

Your VPC may need to host additional EC2 resources, and this will be a factor in your capacity planning.

ForgeRock Instances

Some ForgeRock solutions will require additional EC2 instances for ForgeRock components beyond what is allocated to the Kubernetes cluster. For example, these could include DS, CS, CTS, User, Replication and Backup instances depending on your specific design.

Utility Instances

Other instances that will likely be a part of your deployment include jump boxes and management instances. Jump boxes are used by system administrators to gain shell access to instances inside the VPC. They are particularly useful when working in the VPC before connectivity to the on-prem environment has been established. Management instances, or what we tend to refer to as “EKS Consoles,” are used for configuring and launching scripted deployments of EKS as well as providing a command line interface for managing the cluster and application deployments. Additional tools such as Helm or Skaffold are commonly installed here.

Load Balancers

Applications deployed on EKS are commonly exposed outside the cluster via Kubernetes Service and Ingress resources, which can provision AWS load balancers such as Application Load Balancers (ALBs). ALBs can be configured as public (accessible from the internet) or private. Both types may be utilized depending on your design. ALBs should be deployed across your availability zones, and each subnet an ALB is assigned to currently must have a minimum of eight free IP addresses for it to launch successfully. Because an ALB uses dynamic IP addressing and can add or remove network interfaces based on workload, it should always be accessed via DNS.

Global Accelerator

AWS recently introduced a service called “Global Accelerator.” This service provides two publicly routable static IP addresses that are exposed to the internet at AWS edge locations, and can be used to front-end your ALBs or EC2 instances. This service can enhance performance for external users by leveraging the speed and dependability of the AWS backbone.

It can also be used to redistribute or redirect traffic to different back-end resources without requiring any changes to the way users connect to the application, e.g. in blue / green deployments or in disaster recovery scenarios. When external clients require static IP addresses for whitelisting purposes, this service addresses the ALB’s lack of support for static addresses. Furthermore, Global Accelerator has built-in mitigation for DDoS attacks. The accelerator should be associated with subnets in each availability zone in your VPC, and will consume at least one IP address from each of these subnets.

DNS

Route 53 is AWS’s DNS service. It can host zones that are publicly accessible from the internet as well as private hosted zones that are accessible within your cloud environment. In some situations it may be desirable for systems in your VPC and / or peer VPCs to resolve names in zones hosted by on-prem DNS servers. Conversely, there are situations where on-prem systems need to be able to resolve names in your private hosted zones in Route 53. This can be achieved by establishing inbound and / or outbound Route 53 Resolver endpoints in your VPC, as well as configuring the appropriate zone forwarders. Resolver endpoints should be associated with subnets in each availability zone in your VPC, and will consume at least one IP address from each of these subnets.

Environments

A significant element of planning your cloud infrastructure is determining the types of environments you will need and how they will be structured.

For non-production, you may want to create separate lower environments for development, code testing and load / performance testing. Depending on your preferences or specific needs, these environments can share one VPC or have their own dedicated VPCs. In a shared VPC, these environments can be hosted on the same EKS cluster using different namespaces, or have their own dedicated EKS clusters.

For your production environment, you may want to implement a blue / green deployment model. This also presents choices, such as whether to run entirely separate cloud infrastructure for each, or whether to have shared infrastructure where the EKS cluster is divided into blue and green namespaces.

Again, these choices depend on your preferences and specific requirements, but your operating budget for running a cloud infrastructure will also influence how much cloud infrastructure you will want to build and operate. The more infrastructure that is shared, the lower your ongoing operating costs will be.

Putting It All Together

Preparing Cost Estimates For Your Cloud Infrastructure

By now you should be developing a clearer picture of what your cloud infrastructure will look like and be better situated to put together a high-level design. The next step is to take an inventory of the components in your high-level design and enter this information into the various budgeting tools AWS makes available to you, like the Simple Monthly Calculator and TCO calculator. This step will provide you with estimates of your cloud infrastructure operating expenses and help you determine if the cost of what you have designed is consistent with your budget.

If costs come in higher than anticipated, there are approaches you can take to reduce your expenses. As previously discussed, sharing some infrastructure can help; for example, consolidating your lower environments into a single VPC if that is feasible. Since EC2 instances generally represent the largest percentage of your operating costs, exploring the use of “reserved instances” would also present cost savings opportunities once you are closer to finalizing design and are prepared to commit to using specific instance types over an agreed time period.

VPC IP Address Space and Subnet Layout – Part 2

We’ve already talked briefly about the criticality of planning your IP address space carefully. Now we will put this into practice.

Once you have prepared a high-level design that meets your functional and budgetary requirements, the next step is to identify the networking requirements of each component; e.g. the number of IPs EKS worker nodes will consume, or how many IPs must always be available on a subnet for an Application Load Balancer to deploy successfully.

Another factor to consider is the number of IP addresses reserved on each subnet by the cloud provider. On AWS, five addresses from each subnet are reserved and unavailable for your use. These factors, along with the number of availability zones you will be deploying into, will drive the number and size of the subnets within each VPC.
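Putting these numbers together, subnet sizing becomes a small calculation: add up what must fit in each subnet and pick the smallest block whose usable addresses (the total minus AWS's five reserved) cover it. The sketch below assumes IPv4 subnets between /28 and /16; the example requirement is hypothetical.

```python
AWS_RESERVED_PER_SUBNET = 5  # network address, VPC router, DNS, future use, broadcast


def usable_ips(prefixlen: int) -> int:
    """Addresses left for your resources in an AWS IPv4 subnet of this size."""
    return 2 ** (32 - prefixlen) - AWS_RESERVED_PER_SUBNET


def smallest_subnet(required_ips: int) -> int:
    """Largest prefix length (smallest subnet) whose usable addresses cover the need."""
    for prefixlen in range(28, 15, -1):  # AWS subnets range from /28 down to /16
        if usable_ips(prefixlen) >= required_ips:
            return prefixlen
    raise ValueError(f"{required_ips} addresses will not fit in a single /16 subnet")


# Hypothetical per-AZ need: 90 worker addresses + 8 free for an ALB
# + 1 for Global Accelerator + 1 for a Route 53 Resolver endpoint.
print(smallest_subnet(90 + 8 + 1 + 1))
```

With that example, the 100 addresses needed fit in a /25 (123 usable), while a /26 (59 usable) would be too small.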

You should now have the basis for determining the CIDR block sizes needed to build your cloud infrastructure as designed, and can work with your organization’s networking team to have them allocated and assigned to you.

Anecdotally, we have encountered situations where clients could not obtain CIDR blocks of the desired size from their organization. They had to scale back their cloud infrastructure design to fit smaller blocks. Engaging your networking team early in the design process will help you identify whether this is a risk and work through any IP address space constraints more efficiently.

Next Steps

In this installment, we’ve provided you with detailed insight into what goes into designing public cloud infrastructure to host your ForgeRock implementation. In the next part of this series, we will move into the development phase, including tools, resources and automation that can be leveraged to successfully deploy your cloud infrastructure.

Next Up in the Series: DEVELOPMENT - Tools, Resources and Automation

Deploying Identity and Access Management (IAM) Infrastructure in the Cloud - PART 1: PLANNING

Explore critical concepts (planning, design, development and implementation) in detail to help achieve a successful deployment of ForgeRock in the cloud. Part 1 of the series delves into planning considerations organizations must make when deploying Identity and Access Management in the cloud…

Blog Series: Deploying Identity and Access Management (IAM) Infrastructure in the Cloud

In a 2018 blog post, we explored high-level concepts of implementing ForgeRock Identity and Access Management (IAM) in public cloud using Kubernetes and an infrastructure as code approach to deployment. Since then, our clients’ interest in cloud-based deployments has increased substantially. They seek to leverage the benefits of public cloud as part of their initiatives to modernize their IAM systems.

Essential phases to consider when deploying on cloud-based technology infrastructure:

Planning (PART 1)

Design (PART 2)

Development (PART 3)

Implementation (PART 4)

Reference Architecture for Identity and Access Management (IAM) cloud deployment

In this blog series, we explore each of these phases in greater detail to help you achieve a successful deployment of ForgeRock Identity and Access Management (IAM) in the cloud. We reference AWS as our cloud provider, but the overall concepts carry over to other cloud providers, even though their specific tools, services and methods differ.

Part 1: PLANNING

Organizational Considerations

Given the power and capabilities of cloud computing, it is theoretically possible to design and build most of the platform with minimal involvement from other groups within your organization. In many cases, however, this can lead to significant conflicts and delays when it is time to integrate your cloud infrastructure with the rest of the environment and put it into production. The risk is greatest at larger companies.

The particulars vary between organizations, but here are the teams we suggest consulting during the planning phase:

Network Engineering team

Server Engineering team

Security Engineering team

Governance / Risk Management / Compliance team(s)

These discussions will help you identify resources and requirements that will be material to your design.

For example, the Network Engineering team will likely assign the IP address space used by your virtual private clouds (VPCs) and work with you to connect the VPCs to the rest of the network environment.

The Server Engineering team may have various standards, like preferred operating systems and naming conventions, that need to be applied to your compute instances.

The Security Engineering and Risk Management teams will likely have various requirements that your design needs to comply with as well.

One or more of these teams can also help you identify common infrastructure services, such as internal DNS and monitoring systems, that your cloud infrastructure may be required to integrate with.

Finally, your organization might already use public cloud and have an existing relationship with one or more providers. This may influence which cloud provider you choose. The internal team(s) involved should be able to help you properly establish a provider account or environment for your initiative.

Infrastructure Design Goals

Although you don’t have to build a physical data center, planning cloud-based technology infrastructure involves many of the same requirements. For example:

Defining each required environment, both production and lower environments

Identifying the major infrastructure components and services required and properly scaling them to efficiently service workloads of each respective environment

Designing a properly sized, highly available, fault tolerant network infrastructure to host these components and services

Providing internet access

Integrating with other corporate networks and services

Implementing proper security practices

Identifying and satisfying corporate requirements and standards as they relate to implementing technology infrastructure

Leveraging appropriate deployment tools

Controlling costs

Other important characteristics to consider early in the process include whether you will deploy into a single region or multiple regions, and whether you plan to use a blue/green deployment model for your production environment. Both have implications for your capacity and integration planning.

Security

Shared Responsibility Model

There are two primary aspects of cloud security:

“Security of the Cloud”

“Security in the Cloud”

Cloud providers like AWS are responsible for the former, and you as the customer are responsible for the latter. Simply stated, the cloud provider is responsible for the security and integrity of the underlying cloud services and infrastructure. The customer is responsible for properly securing the objects and services deployed in the cloud. Customers do this by using the tools and controls the cloud provider offers, applying operating system and application patches, and even leveraging third-party tools to further harden security. As a best practice, your architecture should also limit direct internet exposure to only the systems and services that need it to function.

See the AWS Shared Responsibility Model for more information.

Cloud Components and Services

Each function in the cloud architecture depends on a number of components and services, many of which the cloud provider offers natively. Keep in mind that specific implementations will vary, and you can use alternatives for some functions if they are a better fit for your organization. For example, for code collaboration and version control, you can use AWS CodeCommit or a third-party solution like GitLab. For deploying infrastructure using an “infrastructure as code” approach, you can use AWS CloudFormation or, alternatively, HashiCorp Terraform. On the other hand, some cloud provider components and services must be used, like AWS’s VPC and EC2 services, which provide networking and compute resources respectively. The following is a summary of some of the components and services we will be using. If you are relatively new to AWS, it would be helpful to familiarize yourself with them:

Amazon VPC

Provides the cloud based networking environment and connectivity to the internet and on-prem networks

Amazon EC2

Provides compute resources like virtual servers, load balancers, and autoscaling groups that run in the virtual private cloud

Amazon Elastic Kubernetes Service

Managed Kubernetes service that creates the control plane and worker nodes

AWS Global Accelerator

Managed service for exposing applications to the internet

Amazon Route 53

Cloud based DNS service

Amazon Simple Storage Service

Object storage service

Amazon Elastic Container Registry

Managed Docker Container Registry

AWS CodeCommit

Managed source control service that hosts git-based repositories

AWS Certificate Manager

Service for managing public and private SSL/TLS certificates

AWS CloudFormation

Infrastructure as code service for provisioning AWS cloud resources from templates

AWS Identity and Access Management

Provides fine-grained access control to AWS resources

Amazon CloudWatch

Collects monitoring and operational data in the form of logs, metrics, and events.
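To give a feel for the “infrastructure as code” approach with CloudFormation, here is a minimal sketch that generates a template declaring a VPC and its subnets. It uses the real `AWS::EC2::VPC` and `AWS::EC2::Subnet` resource types, and `Ref` on the VPC resolves to its VPC ID. The logical IDs, CIDRs and availability zones are hypothetical. A production template would also need route tables, gateways and security controls, which are omitted here.

```python
"""Generate a minimal CloudFormation template for a VPC and its subnets.

A sketch only: logical IDs, CIDRs and AZ names below are hypothetical.
"""
import json


def vpc_template(vpc_cidr: str, subnets: dict[str, tuple[str, str]]) -> dict:
    """subnets maps a CloudFormation logical ID to (CIDR block, availability zone)."""
    resources: dict = {
        "Vpc": {
            "Type": "AWS::EC2::VPC",
            "Properties": {
                "CidrBlock": vpc_cidr,
                "EnableDnsSupport": True,
                "EnableDnsHostnames": True,
            },
        }
    }
    for logical_id, (cidr, az) in subnets.items():
        # CloudFormation logical IDs must be ASCII alphanumeric.
        if not (logical_id.isascii() and logical_id.isalnum()):
            raise ValueError(f"logical IDs must be alphanumeric: {logical_id!r}")
        resources[logical_id] = {
            "Type": "AWS::EC2::Subnet",
            "Properties": {
                "VpcId": {"Ref": "Vpc"},  # Ref on a VPC resolves to its VPC ID
                "CidrBlock": cidr,
                "AvailabilityZone": az,
            },
        }
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "IAM platform VPC (illustrative sketch)",
        "Resources": resources,
    }


if __name__ == "__main__":
    template = vpc_template(
        "10.20.0.0/20",
        {
            "WorkersSubnetA": ("10.20.0.0/24", "us-east-1a"),
            "WorkersSubnetB": ("10.20.1.0/24", "us-east-1b"),
        },
    )
    print(json.dumps(template, indent=2))
```

Saved to a file such as `vpc.json`, the output could be deployed with `aws cloudformation deploy --template-file vpc.json --stack-name <your-stack-name>`. Generating templates from code is one option; teams that choose Terraform would express the same resources in HCL instead.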

AWS Support and Resource Limits

Support

As you work through the design and testing process, you will invariably encounter issues that can be resolved more quickly if you have a paid AWS support plan in place that your team can utilize.

Review the available AWS support plans to determine which one is right for you.

Resource Limits

Although it is still early in the process to determine the number and types of cloud resources you will need, one aspect you should plan for now is resource limits. The initial limits granted to new AWS accounts for certain resource types can be far below what you will need to deploy in an enterprise environment.

Furthermore, the newer the account, the longer AWS can take to approve limit increase requests, which can adversely impact your development and deployment timelines. Establishing a relationship with an AWS account manager and familiarizing them with your initiative can help expedite approvals. This matters most until the account has built a satisfactory payment and utilization history.
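Once a first-cut design exists, a simple check of planned peak usage against current limits shows which increase requests to file early. The sketch below assumes you have already gathered the current values, for example from the AWS Service Quotas console. The quota labels, limits, planned peaks and the 20% headroom default are hypothetical placeholders.

```python
"""Flag account quotas that will not cover the planned design.

Quota labels and numbers are hypothetical placeholders; pull real values from
the Service Quotas console or API for your account and region.
"""
from dataclasses import dataclass


@dataclass(frozen=True)
class QuotaCheck:
    name: str           # label for the quota being tracked
    current_limit: int  # value currently applied to the account
    planned_peak: int   # peak usage the design needs, across all environments


def needs_increase(checks: list[QuotaCheck],
                   headroom_pct: int = 20) -> list[tuple[str, int, int]]:
    """Return (name, current limit, required limit) for quotas that fall short.

    Required = planned peak plus headroom, rounded up; integer math avoids
    floating-point rounding surprises."""
    flagged = []
    for c in checks:
        required = (c.planned_peak * (100 + headroom_pct) + 99) // 100
        if required > c.current_limit:
            flagged.append((c.name, c.current_limit, required))
    return flagged


if __name__ == "__main__":
    plan = [
        QuotaCheck("on-demand vCPUs", current_limit=32, planned_peak=40),
        QuotaCheck("Elastic IP addresses", current_limit=5, planned_peak=3),
    ]
    for name, limit, required in needs_increase(plan):
        print(f"Request increase: {name} (current {limit}, need {required})")
```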

Next Steps

We’ve covered several aspects of the planning process for building your IAM infrastructure in public cloud. In the second installment of this four-part series, we will explore design concepts, including the architecture for the VPCs, EKS clusters and integration with on-prem networks.

For more content on deploying Identity and Access Management (IAM) in the cloud, check out our webinar series with ForgeRock: Containerized IAM on Amazon Web Services (AWS)

Part 1 - Overview

Part 2 - Deep Dive

Part 3 - Run and Operate

OAuth: Beyond the Standard

Hub City Media highlights three ways we’ve taken standard OAuth functionality and added value by combining it with other technology…

OAuth is a standard that lets a client application obtain authorized access to protected data on another server. Plenty of resources on the internet already explain how it works and how to set it up, so we will not cover that in this post. Rather, we will highlight how our clients commonly use it as part of efforts to modernize their IAM solutions. OAuth is rarely the complete picture, because clients need more than a standard, off-the-shelf implementation to meet business objectives.

Below, we’ll highlight three ways we’ve taken standard OAuth functionality and added value by combining it with other technology.

Client Credentials

Flow

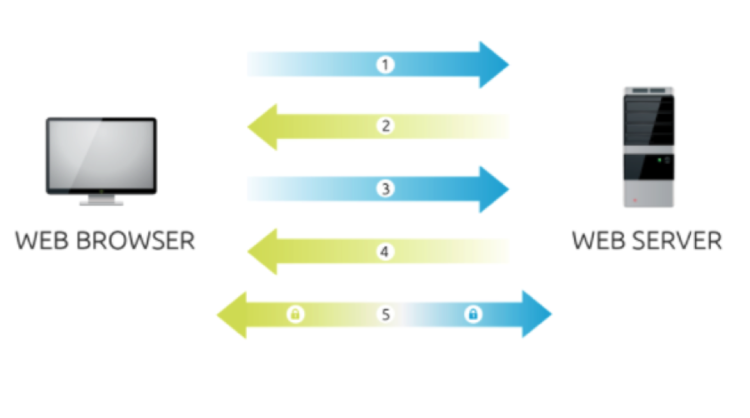

The client credentials grant flow is a basic OAuth use case. In a typical client credentials scenario, the authorization server allows the client to access the resource server without any end-user interaction, so there is no consent flow.

The client begins by requesting an access token from the authorization server using its client id and client secret. The client id is a public string the service API uses to identify the application. The client secret is a key that is stored securely on the server side and should never be publicly exposed. Once the token is granted, the client presents it to the resource server as part of a resource request. The resource server validates the token against the authorization server, ensuring it carries the scope required for the request. If the token is valid, the resource server responds to the request.
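Both sides of this exchange can be sketched with the Python standard library. The client side builds the token request in the shape RFC 6749 (section 4.4) defines: a form-encoded `grant_type=client_credentials` POST authenticated with HTTP Basic. The resource-server side reads an RFC 7662 token introspection response and checks for the required scope. The token URL, client id, secret and scope names are hypothetical, and the exact endpoints depend on your authorization server's configuration.

```python
"""Build an OAuth 2.0 client credentials token request and check token scope.

The token URL, client id/secret and scope names are hypothetical; the
request shape follows RFC 6749 section 4.4 and the scope check reads an
RFC 7662 token introspection response.
"""
import base64
import urllib.parse
import urllib.request


def basic_auth_header(client_id: str, client_secret: str) -> str:
    # RFC 6749 section 2.3.1: form-encode id and secret, then apply HTTP Basic.
    pair = f"{urllib.parse.quote_plus(client_id)}:{urllib.parse.quote_plus(client_secret)}"
    return "Basic " + base64.b64encode(pair.encode("utf-8")).decode("ascii")


def client_credentials_request(token_url: str, client_id: str, client_secret: str,
                               scopes: list[str]) -> urllib.request.Request:
    """No end user is involved, so only the client's own credentials are sent."""
    body = urllib.parse.urlencode({"grant_type": "client_credentials",
                                   "scope": " ".join(scopes)})
    return urllib.request.Request(
        token_url,
        data=body.encode("ascii"),
        headers={
            "Authorization": basic_auth_header(client_id, client_secret),
            "Content-Type": "application/x-www-form-urlencoded",
        },
        method="POST",
    )


def token_has_scope(introspection: dict, required_scope: str) -> bool:
    """Resource-server side: accept only active tokens that carry the scope."""
    if not introspection.get("active", False):
        return False
    return required_scope in introspection.get("scope", "").split()


if __name__ == "__main__":
    req = client_credentials_request("https://auth.example.com/oauth2/token",
                                     "orders-service", "s3cret", ["orders.read"])
    print(req.get_method(), req.full_url)
    print(req.data.decode())
    # Sending it would be urllib.request.urlopen(req); the JSON response
    # carries access_token, token_type and usually expires_in.
    print(token_has_scope({"active": True, "scope": "orders.read orders.write"},
                          "orders.read"))
```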

Customizations

An organization wanted to use this design to authorize applications to access APIs, but didn’t want to place the burden of access token validation on each application. To address this, we added an XML gateway to handle resource-to-scope mapping and token validation.
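The gateway's job can be illustrated in a few lines. The client used an XML gateway product, so the following is only a Python sketch of the logic, not its configuration. A request's method and path are mapped to the scope that route requires. The introspected token is then checked, and the gateway either forwards the request or rejects it. The routes and scope names are hypothetical. The 401 and 403 responses follow the RFC 6750 convention for invalid tokens and insufficient scope.

```python
"""Gateway-style resource-to-scope mapping and token check (illustrative).

Routes and scope names are hypothetical; introspection follows RFC 7662.
"""
from typing import Optional

# Hypothetical mapping: (HTTP method, path prefix) -> required scope.
SCOPE_MAP = [
    ("GET", "/api/orders", "orders.read"),
    ("POST", "/api/orders", "orders.write"),
    ("GET", "/api/customers", "customers.read"),
]


def required_scope(method: str, path: str) -> Optional[str]:
    """Longest matching path prefix wins; None means the route is not exposed."""
    best = None
    for m, prefix, scope in SCOPE_MAP:
        if m == method.upper() and (path == prefix or path.startswith(prefix + "/")):
            if best is None or len(prefix) > len(best[0]):
                best = (prefix, scope)
    return best[1] if best else None


def authorize(method: str, path: str, introspection: dict) -> int:
    """HTTP status the gateway would return: 200 means forward to the backend."""
    scope = required_scope(method, path)
    if scope is None:
        return 404  # unknown route
    if not introspection.get("active", False):
        return 401  # invalid or expired token
    if scope not in introspection.get("scope", "").split():
        return 403  # valid token, insufficient scope
    return 200


if __name__ == "__main__":
    token = {"active": True, "scope": "orders.read"}
    print(authorize("GET", "/api/orders/42", token))
    print(authorize("POST", "/api/orders", token))
```

Centralizing the map in the gateway means backend applications never parse tokens themselves, which was the organization's goal.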

Unfortunately, this wasn’t enough to meet requirements. Management of the client id, secret and scopes (a mechanism to limit what an application can access) was still handled within the Access Manager (AM) platform. That would have required a separate team within the organization to be involved in each deployment.

To remove this hurdle, a custom client plugin allowed AM to interface with an existing Lightweight Directory Access Protocol (LDAP) directory, effectively delegating authentication and authorization administration. With this in place, AM became part of the organization’s existing process for creating and authorizing service accounts. This removed duplicate work during deployments and provided a scalable framework for centralized management of service account access to APIs!

Resource Owner

Flow

In the resource owner grant flow, the client does not rely solely on its own id and secret to connect. Instead, it submits the end user’s credentials to the authorization server. After this point, the flow continues like the client credentials flow shown above. When the client receives an access token for the resource server, the token is tied to the authenticating user rather than to the client application, as it was in the previous example. We typically do not recommend the resource owner grant flow because it exposes the user’s username and password directly to the client, which creates numerous security concerns.
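The security concern is easy to see in the request itself. This minimal sketch builds a resource owner password credentials token request in the shape RFC 6749 (section 4.3) defines, using only the Python standard library. The user's raw password sits in the request body that the client application assembles. The token URL, client credentials, username, password and scope are hypothetical. Per the RFC, a confidential client still authenticates itself alongside the user's credentials.

```python
"""Shape of a resource owner password credentials token request (RFC 6749 4.3).

Shown to illustrate the risk: the client application handles the user's raw
password. URL, ids and credentials are hypothetical.
"""
import base64
import urllib.parse
import urllib.request


def password_grant_request(token_url: str, client_id: str, client_secret: str,
                           username: str, password: str,
                           scopes: list[str]) -> urllib.request.Request:
    body = urllib.parse.urlencode({
        "grant_type": "password",
        "username": username,
        "password": password,  # the end user's password passes through the client
        "scope": " ".join(scopes),
    })
    # Confidential clients still authenticate themselves (RFC 6749 section 4.3.2).
    pair = f"{urllib.parse.quote_plus(client_id)}:{urllib.parse.quote_plus(client_secret)}"
    auth = "Basic " + base64.b64encode(pair.encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        token_url,
        data=body.encode("utf-8"),
        headers={"Authorization": auth,
                 "Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


if __name__ == "__main__":
    req = password_grant_request("https://auth.example.com/oauth2/token",
                                 "legacy-app", "app-secret",
                                 "jdoe", "user-password", ["profile"])
    print(req.data.decode())
```

The IETF’s OAuth 2.0 Security Best Current Practice goes further and states that this grant must not be used. That reinforces the recommendation above.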

Customizations